Outages

== 2024-02-27 ==

'''Affected:''' All services

'''Disruption length:''' 30 minutes

'''Service:''' Web only

'''Fault status page:''' N/A

'''Lost transactions:''' None, only websites affected

We detected unusual activity on our ticket system and discovered a flaw which could allow an attacker to view the last 10 items that an avatar had received (whether the avatar bought them or they were bought for them as a gift). Immediately after detecting the issue, we took the websites offline while a fix was implemented. The downtime was 30 minutes.

No other customer information was disclosed by this defect.

== 2020-11-07 ==

'''Affected:''' All services

'''Disruption length:''' 16 hours 13 minutes

'''Service:''' Full outage

'''Fault status page:''' N/A

'''Lost transactions:''' Most transactions recovered.

At 11:07PM SLT on Saturday, the datacentre complex which hosts our servers experienced what they called a "power incident", which prevented our servers from communicating with each other over the internal network.

Initially the technicians hoped that the switches just needed to be rebooted, but diagnosis showed that a significant amount of equipment needed to be replaced. Some components were not available, and because of COVID the datacentre was running a skeleton crew, so the repair effort was slow.

All of our servers remained powered on during the outage, but they were unable to communicate with each other. This prevented the API servers from accessing the database, which took all of our services offline.

Service was restored at 3:20PM SLT on Sunday, unfortunately making this the longest period of downtime we've ever experienced. When the network was restored, everything immediately started working, with the exception of one of our MongoDB nodes, which was automatically taken out of rotation. This node has now been fully restored, and all outstanding transactions have finished processing. Anything which is still missing can be safely refunded, adjusted or otherwise accounted for.

We have redundancy throughout our system and can lose half of our servers while remaining online. Unfortunately, we are entirely dependent on the private internal network, which makes it a single point of failure, so I'll be talking with the datacentre about ways to avoid situations like this in the future.

Our infrastructure has been extremely reliable in the last few years, with the last significant outage dating back to 2018. We apologise for the inconvenience caused by this outage, but we are confident that we can continue to deliver an exceptionally reliable service going forward.

== 2018-11-25 ==

'''Affected:''' All services

'''Disruption length:''' 7 hours 45 minutes

'''Service:''' Full outage

'''Fault status page:''' None yet

'''Lost transactions:''' None - All transactions recovered.

Services went down at approximately 8:15pm on 2018-11-24 and weren't recovered until 4am on 2018-11-25.

This incident appears to have been caused by a MongoDB bug. All three of our Mongo servers crashed simultaneously when WiredTigerLAS.wt suddenly ballooned from 4 KB to 800 GB.

This appears to be a rare but known issue with MongoDB ( https://goo.gl/A7msRf ), and we're working with the developers to stop this from happening again.

Our off-grid transaction processor has done its job and is restoring all transactions that occurred during this period.

I'm sincerely sorry for the disruption caused by this incident.

== 2018-11-09 ==

'''Affected:''' All services

'''Disruption length:''' 4 hours 15 minutes

'''Service:''' Full outage

'''Fault status page:''' None yet

'''Lost transactions:''' None - All transactions recovered.

At approximately 10:45PM on the 9th of November, 2018 (Second Life Time), our vRack configuration at the datacenter was corrupted and two of our servers were isolated.

The vRack is a "virtual rack" system which connects all our servers together via a virtual internal network. We rely on it for transmission of sensitive information between our 6 core dedicated servers.

This caused a total outage which lasted until 3 AM, when we were able to fix the broken configuration: an outage of 4 hours and 15 minutes.

The hosting company is still investigating what caused our vRack configuration to become corrupted. We will post further information here when we have it.

== 2018-05-26 ==

'''Affected:''' All services

'''Disruption length:''' 45 minutes

'''Service:''' Full outage

'''Fault status page:''' http://travaux.ovh.net/?do=details&id=31831

'''Lost transactions:''' None. All transactions recovered.

A fault at our host's datacenter caused a 45-minute outage, starting at 10:12pm and ending at 10:57pm SLT. Service was restored automatically once the datacenter fixed the fault. OVH status ticket: http://travaux.ovh.net/?do=details&id=31831

== 2018-04-28 ==

'''Affected:''' All services

'''Disruption length:''' 2.5 hours

'''Service:''' Full outage

'''Fault status page:''' N/A

'''Lost transactions:''' None. All transactions recovered.

At approximately 10:11 PM on the 28th of April, 2018, one of our database servers went offline, disrupting access to our websites. The failover kicked in and kept in-world services alive, but at 11:06 PM the failover database server went offline too.

Visitors to our websites received a message stating that we were "heavily overloaded". This wasn't the case: it is simply the generic message that the website code shows when it can't connect to the database.

Service was restored at 01:36 AM.

The cause of this is rather embarrassing, but we operate a policy of full transparency, so we owe you the honest truth.

Last month we acquired two new, massive servers to power our database, "Grunt" and "Sandman". However, when setting up the machines, I completely neglected to set up automatic billing, so the servers went unpaid and were suspended.

I am truly sorry for this outage. It shouldn't have happened; it has never happened before and it will not happen again!

~Casper

== 2017-06-14 ==

'''Affected:''' All services

'''Disruption length:''' 4 hours

'''Service:''' Full outage

'''Fault status page:''' N/A

'''Lost transactions:''' None, though some may not have appeared on the sales pages.

At approximately 9:30pm SL time on the 14th of June, 2017, we experienced an outage which impacted all of our servers.

The root cause of the outage was severe packet loss on our internal vRack (internal routing between our servers). This resulted in file locking issues on our NFS storage cluster: file locks were not released, causing all of our services to grind to a halt. At around 1:30am, service was restored by restarting the system.

Unfortunately, the NFS file locking issue also caused a few vendors to revert to the default profile. Thanks to the kind efforts of many of our users, we were able to find the cause and correct the logic issue.

The use of NFS isn't ideal, but so far it has been the only option capable of keeping up with our load.

As a result of this outage, we're accelerating our deployment schedule for the next-gen casperdns service. This involves deployment of the new storage cluster code we've been working on since March.

Please see [[Project Spectre]].

We sincerely apologise for any inconvenience caused. Please know that we are very aware how frustrating these outages are. We're working to bring you a much more reliable platform.

== 2017-05-11 ==

'''Affected:''' CasperVend only (some customers)

'''Disruption length:''' 25 minutes

'''Service:''' Partial outage

'''Fault status page:''' N/A

'''Lost transactions:''' None

At approximately 18:45 SL time on the 11th of May, 2017, one of our servers experienced a kernel panic. The problem was noticed by datacenter technicians and full service was restored at 19:10.

Total downtime was 25 minutes.

Unfortunately, however, any vendors which had reset during this period were stuck on an offline profile, and could only be fixed with a reset (or a region restart). This is why some were still reporting issues after the service came back online.

I'm looking into why the vendors got stuck in the offline profile. This shouldn't have happened: they are supposed to re-establish themselves automatically.

I will post an update here when this has been fully investigated.

I apologise for any inconvenience caused.

== 2017-04-26 ==

'''Affected:''' All services except CasperSafe

'''Disruption length:''' 29 minutes

'''Service:''' Partial outage

'''Fault status page:''' http://status.ovh.co.uk/?do=details&id=14548

'''Lost transactions:''' None

At 7:50AM SLT on the 26th of April, 2017, OVH (our hosts) suffered a power glitch which severed our database servers from the rest of the network. Both the primary and the secondary database servers were affected.

Power was briefly restored at 8:06, for just two minutes, before failing again.

Service was finally restored at 8:21AM SLT.

Total downtime was 29 minutes.
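The 29-minute total is shorter than the 31 minutes between 7:50 and 8:21 because of the two-minute window at 8:06 when power was back. A minimal Python sketch of that arithmetic, assuming the second failure began at 8:08 (two minutes after 8:06); the helper name `minutes_down` is hypothetical:

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def minutes_down(intervals: List[Tuple[str, str]]) -> int:
    """Sum the length, in whole minutes, of (start, end) "HH:MM" outage windows."""
    total = 0
    for start, end in intervals:
        s = datetime.strptime(start, "%H:%M")
        e = datetime.strptime(end, "%H:%M")
        if e < s:  # the window crosses midnight
            e += timedelta(days=1)
        total += int((e - s).total_seconds() // 60)
    return total


# 2017-04-26 (SLT): down 7:50-8:06, briefly up, then down again 8:08-8:21
outage = [("07:50", "08:06"), ("08:08", "08:21")]
print(minutes_down(outage))  # 16 + 13 = 29
```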

Note that our servers themselves did not lose power; only some intermediate networking equipment did.

No transactions should have been lost during this incident, as our transaction servers remained online.

== March 2017 Chaos (aka "the month that Casper broke SL") ==

We had two large outages during March 2017, and one smaller one. They were all somewhat (but not directly) related.

Please know that I completely understand that you depend on us, and I give this message with humility, respect and gratitude to our loyal customers, to our friends in the community, and particularly to our support volunteers, who pulled all-nighters during both of the extended outage periods to answer questions and make sure that our affected customers were not ignored. I owe you all a great deal.

I have given a technical description of the issues below. For those who are not so technical, it breaks down as follows:

* We were attacked on the 2nd/3rd of March by someone unknown.

* Some of the fixes provided by the datacentre malfunctioned and caused the extended downtime on the 15th/16th and the shorter outage on the 17th of March.

Please know that I am not trying to shift blame, only to give you an accurate description of the problems. I accept full responsibility for the failures.

That being said, this kind of thing does not happen often. You may not have heard about outages from similar operators in Second Life, but that is because they generally do not publish them, whereas we do. I believe in openness.

I would like to personally apologise for the disruption, and I want to make it very clear that I am committed to doing better in the future. I know that you rightly expect it.

~Casper

<span style="color:red">'''Important''': Unfortunately, due to an indirectly related issue, a small number of in-world vendors may have lost their configuration during this downtime. Please check your in-world units to make sure that their configuration is intact. We have already repaired this issue and apologise for the inconvenience.</span>

== 2017-03-17 ==

'''Affected:''' All services

'''Disruption length:''' <1 hour

'''Service:''' Partial outage

'''Fault status page:''' N/A

'''Lost transactions:''' None reported

At approximately 3:30AM SLT on the 17th of March, an issue identical to the 2017-03-15 outage struck. This time we were able to find and fix the fault while it was still present.

You are all owed an explanation, so I will try to be as thorough as possible.

During the DDoS attack on the 2nd of March, we made many configuration changes in order to bring things back online as soon as we could. The attackers were persistent and malicious, so we had to do whatever we could to mitigate the attack. Part of this was enabling OVH's global IP firewall (previously we had only used rented Cisco firewalls, but these were being overloaded).

Following the attack, CasperLet had some lag and reliability issues. We traced this to DNS lookups failing on Badwolf, one of the servers that power CasperLet, and further found that the OVH global IP firewall was causing these DNS lookups to fail. No problem: we disabled the firewall on Badwolf and reported the issue to OVH engineers. The other servers were still firewalled, but had no such DNS lookup problems.

The cause of this latest outage, and of the outage on the 15th, was that this problem "spread" to the other servers, which were still using the OVH firewall. The firewall configurations for the servers are set to explicitly permit UDP traffic on port 53 (DNS), so this is some stupid bug that has been introduced into the OVH firewall.
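Because this fault showed up only as failing DNS lookups, ordinary ping and HTTP checks would not necessarily catch it. A minimal Python sketch of a per-server resolution probe that could be run periodically on each machine; the function name, threshold and status strings are hypothetical, and `socket.getaddrinfo` has no timeout parameter of its own, so how long a failing lookup takes depends on the system resolver configuration:

```python
import socket
import time
from typing import Callable


def probe_dns(hostname: str,
              resolver: Callable = socket.getaddrinfo,
              slow_after_s: float = 2.0) -> str:
    """Resolve hostname once and classify the result as "ok", "slow" or "failed"."""
    start = time.monotonic()
    try:
        resolver(hostname, None)  # any answer at all counts as a successful lookup
    except socket.gaierror:
        return "failed"
    elapsed = time.monotonic() - start
    return "slow" if elapsed > slow_after_s else "ok"
```

Run from cron against a name each server genuinely needs to resolve, a "failed" or "slow" result on one machine but not the others would point at that machine's path to its resolver, which is the pattern described above. The `resolver` parameter exists only so the probe can be exercised without a network.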

We have now disabled the global IP firewall with OVH and implemented our own measures to protect our servers instead.

I have had a very heated discussion with OVH today about this. They refuse to accept that their firewall is malfunctioning, despite the very clear evidence that it is. I am not happy. I will update this post once they have provided a satisfactory answer.

<span style="color:red">'''Important''': Unfortunately, due to an indirectly related issue, a small number of in-world vendors may have lost their configuration during this downtime. Please check your in-world units to make sure that their configuration is intact. We have already repaired this issue and apologise for the inconvenience.</span>

== 2017-03-15 ==

'''Affected:''' All services

'''Disruption length:''' ~8 hours

'''Service:''' Total outage

'''Fault status page:''' N/A

'''Lost transactions:''' CasperVend: 5%, CasperLet: 5% (v1.40 only)

At approximately 17:50 SLT on the 15th of March, our services became unreliable and soon went down entirely.

This (as with the previous downtime on the 2nd) occurred while I was away from home and just after I went to bed. This led many to suspect that it was another DDoS attack; however, this was not the case.

When I awoke at approximately 1am SLT, I immediately investigated but found that the original cause was already gone (there were some contention issues which were fixed by a server reboot). Because of this, I assumed that the issue had been triggered by a power outage in the same rack as our servers, as announced on the OVH status page.

However, this was not actually the cause. We discovered the real cause during the next downtime (see above).

<span style="color:red">'''Important''': Unfortunately, due to an indirectly related issue, a small number of in-world vendors may have lost their configuration during this downtime. Please check your in-world units to make sure that their configuration is intact. We have already repaired this issue and apologise for the inconvenience.</span>

== 2017-03-02 ==

'''Affected:''' All services

'''Disruption length:''' ~12 hours

'''Service:''' Total outage

'''Fault status page:''' N/A

'''Lost transactions:''' CasperVend: 30%, CasperLet: 50%

On the 2nd of March at around 10:30am SLT, our services started to become unstable. I (Casper) was notified, but wasn't at my desk. I was able to restore service by restarting the servers. This happened a few more times, each time causing minimal disruption and lasting 20 minutes or so. I finally got to my desk at around 4pm SLT and checked the servers to make sure they were healthy. Everything seemed fine, but I wasn't able to see what had actually caused the issues.

It turns out that these small pockets of disruption were test runs by the attackers.

At approximately 17:45 SLT, a sustained DDoS attack began, taking our services offline completely. This was not a typical DDoS attack (those are now completely mitigated by our hosting provider automatically); it was what is known as a [https://www.youtube.com/watch?v=XiFkyR35v2Y slow loris] attack. This type of attack is unusual because it ties up a server's connections by sending requests very slowly, rather than exhausting CPU or bandwidth: our servers were operating just fine, with low load and no obvious resource issues, so no alarms were triggered and the datacentre technicians were not alerted. Unfortunately, this occurred just after I had gone to bed (which was likely not a coincidence, but that is merely speculation).
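A slow loris client keeps each connection alive by sending its request headers a few bytes at a time, so a per-read timeout never fires. A common countermeasure is an overall deadline for receiving the complete header block. The Python sketch below illustrates that idea only; it is not the patch that was applied during this incident, and the function name and limits are hypothetical (production web servers have equivalent header-timeout settings):

```python
import socket
import time
from typing import Optional


def read_request_head(conn: socket.socket,
                      deadline_s: float = 10.0,
                      max_bytes: int = 16 * 1024) -> Optional[bytes]:
    """Return the HTTP request head, or None if the client is too slow or sends too much.

    The deadline covers the whole header block, so a client that trickles in
    one byte every few seconds is still cut off once deadline_s has elapsed.
    """
    start = time.monotonic()
    buf = b""
    while b"\r\n\r\n" not in buf:
        remaining = deadline_s - (time.monotonic() - start)
        if remaining <= 0 or len(buf) > max_bytes:
            return None  # caller should close the connection
        conn.settimeout(remaining)  # never wait past the overall deadline
        try:
            chunk = conn.recv(4096)
        except socket.timeout:
            return None
        if not chunk:
            return None  # client closed before finishing its headers
        buf += chunk
    return buf.split(b"\r\n\r\n", 1)[0]
```

The design choice is that the clock starts once per request, not once per `recv`, which is what stops a slow client from holding a connection slot indefinitely.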

Here is the timeline:

[17:45 SLT] - Attack began

[~19:00 SLT] - Due to a lack of information, it was GUESSED that the outage was related to some OVH issues, but this was later found to be unrelated.

[00:30 SLT] - Casper woke and immediately began investigating

[00:45 SLT] - Casper began work to separate the CasperVend and CasperLet sites from our in-world systems, so that our in-world systems could get back online.

[01:00 SLT] - Deliveries start to trickle through

[01:20 SLT] - Attack vector shifts to target the webservers rather than the PHP scripts. Deliveries stop again.

[02:30 SLT] - Blocked all IP addresses except for Second Life IPs. All in-world systems back online. Websites offline to protect other systems.

[03:30 SLT] - Attack vector shifts to a combined DNS amplification and SYN-flood attack against our public IPs. OVH anti-DDoS triggers and vacuums up the traffic, but also blocks a lot of legitimate traffic (partial disruption)

[05:12 SLT] - With the help of OVH engineers, we started to bring services back online despite the ongoing attack, starting with CasperLet

[05:27 SLT] - CasperVend back online

- By this stage, our in-world services were working again. Total downtime approximately 12 hours -

[06:00 SLT] - The websites were brought back online

[07:00 SLT] - We completed recovery of lost transactions

[08:34 SLT] - Websites go down again after another attack vector shift. In-world services remain online and protected.

[10:00 SLT] - We manually reboot our servers to apply some kernel and software patches to mitigate the new attack vector.

[10:11 SLT] - All services restored

[11:47 SLT] - "All clear" announced

Even after the "All Clear" was announced, the attack continued, but it wasn't able to penetrate our newly configured defences. The attack stopped at roughly 2pm SLT.

== 2016-06-12 ==

'''Affected:''' All services

'''Disruption length:''' ~4-6 hours

'''Service:''' Severely delayed transactions

'''Fault status page:''' N/A

'''Lost transactions:''' CasperVend: None. CasperLet: None (if up to date).

At approximately 00:29 SL Time on the 12th of June, 2016, one of our storage servers ran out of inodes. Although the machine still had 60% free disk space, it held too many files (about 6.5 billion), and this prevented any further writes. Unfortunately, since we were monitoring only disk space and not inode usage, no alert or alarm was triggered and our failover did not kick in. The other servers did their intended job and saved all of their received transactions locally, so zero transactions were lost; they were just severely delayed.
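A disk can be "full" in two independent ways: out of blocks (space) or out of inodes (file entries). A minimal Python sketch of a check that watches both, using the POSIX `os.statvfs` call; the 10% threshold and the function names are hypothetical, and on Linux `df -i` shows the same inode figures by hand:

```python
import os
from typing import List, NamedTuple


class FsUsage(NamedTuple):
    free_space_pct: float
    free_inodes_pct: float


def fs_usage(path: str) -> FsUsage:
    """Free space and free inodes for the filesystem holding `path` (POSIX only)."""
    st = os.statvfs(path)
    space = 100.0 * st.f_bavail / st.f_blocks if st.f_blocks else 100.0
    # Some filesystems allocate inodes dynamically and report f_files == 0
    inodes = 100.0 * st.f_favail / st.f_files if st.f_files else 100.0
    return FsUsage(space, inodes)


def check(usage: FsUsage, min_free_pct: float = 10.0) -> List[str]:
    """Return one warning per resource that has dropped below the threshold."""
    problems = []
    if usage.free_space_pct < min_free_pct:
        problems.append(f"disk space low: {usage.free_space_pct:.1f}% free")
    if usage.free_inodes_pct < min_free_pct:
        problems.append(f"inodes low: {usage.free_inodes_pct:.1f}% free")
    return problems
```

With the figures from this incident (60% of disk space free, no inodes left), `check(FsUsage(60.0, 0.0))` returns `['inodes low: 0.0% free']`, whereas a check on disk space alone stays silent.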

We were able to start diagnosing the issue at approximately 04:30 SL Time, and by 05:00 we had restored partial service. However, the service was not fully restored until around 6:30AM.

The all-clear was given at 8:15 AM.

We sincerely apologise for this outage, and we are taking steps to make sure it never happens again. We hope that our complete transparency regarding this issue, coupled with the fact that our backup systems ensured your transactions were recovered, will reinforce the fact that we are absolutely committed to ensuring that you can rely on our service.

== 2016-02-11 ==

'''Affected:''' All services

'''Disruption length:''' 10 minutes

'''Service:''' Total outage

'''Fault status page:''' http://status.ovh.net/?do=details&id=12169 (http://travaux.ovh.net/?do=details&id=16568)

'''Lost transactions:''' CasperVend: None. CasperLet: None (if up to date).

At approximately 7:55 on the 11th of February, Russian ISP GlobalNet.ru issued a faulty BGP announcement (a BGP leak).

Thanks to one of our users for this very eloquent description: "BGP = Border Gateway Protocol. it's how routers of neighboring networks advertise which routes are most efficient. something advertises the wrong route, is like Google Maps telling everyone to skip the freeway and use an alley instead."

The leak affected thousands of hosts around the internet (basically anybody who peers with GlobalNet, which is a lot of people).

However, our datacentre was the first to respond to the incident, and we were back online within a few minutes.
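To make the user's analogy a little more concrete: routers forward traffic along the most specific matching prefix, and when several BGP routes cover the same prefix they prefer, among other criteria, the one with the shorter AS path. A leaked announcement that looks more specific or shorter can therefore attract traffic it should never carry. The toy Python model below shows only those two rules; real BGP best-path selection has more steps (local preference is considered before AS-path length, for example), and the prefixes and AS numbers are documentation values, not the ones involved in this incident:

```python
import ipaddress
from typing import List, NamedTuple, Optional, Tuple


class Route(NamedTuple):
    prefix: str                # destination network, e.g. "203.0.113.0/24"
    as_path: Tuple[int, ...]   # autonomous systems the announcement passed through
    label: str


def pick_route(dest: str, table: List[Route]) -> Optional[Route]:
    """Toy selection: longest matching prefix first, then shortest AS path."""
    addr = ipaddress.ip_address(dest)
    matches = [r for r in table if addr in ipaddress.ip_network(r.prefix)]
    if not matches:
        return None
    return max(matches, key=lambda r: (ipaddress.ip_network(r.prefix).prefixlen,
                                       -len(r.as_path)))


table = [
    Route("203.0.113.0/24", (64496, 64497, 64498), "legitimate path"),
    Route("203.0.113.0/24", (64511, 64498), "leaked path"),
]
print(pick_route("203.0.113.10", table).label)  # leaked path
```

Both routes cover the destination with the same prefix length, so the leaked one wins on AS-path length alone, which is the "use an alley instead" effect from the quote.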

== 2016-02-10 - Planned maintenance ==

We shut down for approximately 10 minutes at 4:30AM (Second Life Time) on the 10th of February.

This was a planned maintenance window, with two goals:

* To apply some kernel patches to help mitigate DDoS attacks in the future

* To test the new CasperLet failsafe mechanism in a controlled manner.

The maintenance completed successfully.

== 2016-02-08 == | |||

'''Affected:''' All services | |||

'''Disruption length:''' 6 hours | |||

'''Service:''' Severely degraded | |||

'''Fault status page:''' N/A - Internal | |||

'''Lost transactions:''' CasperVend: None. CasperLet: All transactions lost during this period - see information below. | |||

Short version for technophobes: We were attacked. Nothing was compromised, we are secure, they just overloaded us. We've implemented fixes to make sure this doesn't happen again, and we've already fixed the problem which meant CasperLet transactions were lost. Sorry for the inconvenience. | |||

'''Now for the geeky stuff''' | |||

At approximately 6:30PM Second Life time, our servers were hit with a DDoS attack. Unfortunately, the attack was undetected by the technicians at the datacentre (I'll explain why a bit later), and it occurred at a time when myself and my staff were not available. | |||

I discovered the issue at 1AM Second Life time, and since the traffic was all coming from the same subnet, I was able to block the attack at the firewall very quickly, and bring our services back online. | |||

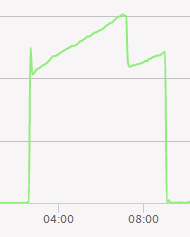

This attack was very unusual in a few ways. Firstly, the MTRG graph showed that the amount of traffic peaked at a ludicrous 785gbps, and had a sustained average of around 600gbps. This would make the attack one of the largest ever recorded in internet history, and while technically possible (the datacentre does have this much capacity), it didn't seem likely - especially since other servers at the datacentre were unaffected. | |||

[[File:99ee2017dca43f92b26b5eb58a200d11.png]] | |||

In addition, while OVH's anti-ddos system did kick in, it automatically disengaged after a few minutes. | |||

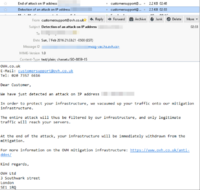

[[File:Bbe68f3b0955756874a502c21b1701c8.png|200px|thumb]] | |||

It took a long time and a lot of discussion with the technicians at the datacentre to finally work out what happened, but the long and short of it... this was an ''internal attack'' from thousands of compromised servers in the same datacentre, and specifically targeted a certain configuration we use. | |||

The datacentre we use is ran by OVH, which is the largest server company in the world. They have something like 200,000 servers in the same datecentre we use, and as is always true in public datacentres, many of those boxes were compromised, and were used to launch the attack. | |||

Internally, we use NFS, encrypted using Kerberos, and that runs over a particular port via a vRack (virtual rack), on secondary ethernet adapters. The attack was a UDP broadcast, reflection and amplification attack. While our systems weren't specifically vulnerable to this attack vector (i.e they couldn't use our servers to amplify their attack on others), they were able to overload our internal ethernet connections and effectively render our services offline. | |||

This wasn't detected quickly by the datacentre technicians, and wasn't mitigated by the OVH anti-ddos system, because it was an internal attack and this vector wasn't being properly monitored. | |||

The standard monitoring that we have in place on our servers (ping, http) never detected a problem either, because these services were up.. it was the internal connection between our servers which was affected. | |||
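The gap here was that every check looked at the public side of each server. A minimal Python sketch of a check that instead exercises the internal links, by opening a TCP connection from one server to each peer's internal address; the peer names and addresses are hypothetical placeholders (2049 is the standard NFS port), and a real deployment would run it on every server and alert on any `False`:

```python
import socket
from typing import Dict, Tuple


def probe_peers(peers: Dict[str, Tuple[str, int]],
                timeout_s: float = 2.0) -> Dict[str, bool]:
    """Try a TCP connection to each peer; True means the handshake completed in time."""
    results = {}
    for name, (host, port) in peers.items():
        try:
            with socket.create_connection((host, port), timeout=timeout_s):
                results[name] = True
        except OSError:  # refused, unreachable or timed out
            results[name] = False
    return results


# Hypothetical internal (vRack-side) addresses of the NFS peers
INTERNAL_PEERS = {
    "storage-1": ("10.0.0.11", 2049),
    "storage-2": ("10.0.0.12", 2049),
}
```

A successful handshake does not prove the link has spare capacity, but a flood that saturates the internal interface tends to show up as connection timeouts, which public ping and HTTP checks never see.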

The good news is that this particular attack vector is now permanently closed, because we have simply blocked all other internal hosts which don't belong to us at the router level (OVH very conveniently allows you to add firewall rules to their internal routing).
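Blocking every internal host that isn't ours amounts to a default-deny allowlist. A minimal Python sketch of the matching logic, using the standard `ipaddress` module; the network range is a hypothetical placeholder, and the real rules live in OVH's router configuration rather than in application code:

```python
import ipaddress
from typing import Iterable, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Hypothetical placeholder for the internal ranges that belong to us
OUR_INTERNAL_NETS = [ipaddress.ip_network("10.0.0.0/24")]


def is_allowed(src: str, allowed: Iterable[Network] = OUR_INTERNAL_NETS) -> bool:
    """Default deny: accept a source address only if it falls inside one of our networks."""
    addr = ipaddress.ip_address(src)
    return any(addr in net for net in allowed)
```

Listing what is permitted, rather than what is blocked, means a newly compromised neighbour in the same datacentre is rejected without anyone having to notice it first.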

Were we specifically targeted? It's unclear. We weren't the only ones affected: several other hosts who also use NFS were taken offline too, though it was not a widespread issue.

What '''is''' slightly odd, though, is that at the same time we received notice from another host that they had received an attack on a domain name which I personally own, but which hasn't been in use for ten years. This may be a coincidence; I'm not sure.

[[File:61a5ca443d1521b7d8bdcc96c1b08eec.png|200px|thumb]]

If you've read this far and haven't already tuned out, you probably know that a DDoS attack is not a compromise; it's just a denial of service. Our security was not broken.

Thanks to our failsafes, CasperVend was able to fully recover all transactions which occurred during that period. Unfortunately, however, CasperLet's failsafes did not work, and all CasperLet payments over that period were lost. We've already completed work to fix this problem, and if anything like this occurs again, all CasperLet payments should be recovered once the servers come back online.
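The failsafes are described here only by their effect: payments are kept and recovered once the servers come back. One common way to get that effect is a local store-and-forward spool, sketched minimally in Python below. This is a generic illustration under stated assumptions (a JSON-lines file, a caller-supplied `send` function, and transaction IDs the backend can use to discard duplicates), not CasperTech's actual implementation; the class and method names are hypothetical:

```python
import json
import os
from typing import Callable, Dict, List


class Spool:
    """Append-only local record of payments that have not yet reached the backend."""

    def __init__(self, path: str):
        self.path = path

    def record(self, txn: Dict) -> None:
        # Write and fsync before acknowledging, so the payment survives a crash
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(txn) + "\n")
            f.flush()
            os.fsync(f.fileno())

    def pending(self) -> List[Dict]:
        if not os.path.exists(self.path):
            return []
        with open(self.path, encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def replay(self, send: Callable[[Dict], bool]) -> int:
        """Offer each spooled payment to `send`; keep the ones it did not accept."""
        delivered, remaining = 0, []
        for txn in self.pending():
            if send(txn):
                delivered += 1
            else:
                remaining.append(txn)
        tmp = self.path + ".tmp"
        with open(tmp, "w", encoding="utf-8") as f:
            for txn in remaining:
                f.write(json.dumps(txn) + "\n")
        os.replace(tmp, self.path)  # atomic rename on POSIX
        return delivered
```

If the process dies after `send` succeeds but before the spool is rewritten, that payment is offered again on the next replay, which is why the sketch assumes the backend deduplicates by transaction ID.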

We sincerely apologise for the inconvenience caused by this outage. We understand that you depend on our reliability, and we are proud to remain the largest and most dependable provider even taking this outage into account.

~Casper

== 2015-12-28 ==

'''Affected:''' CasperVend, CasperLet

'''Disruption length:''' 31 minutes

'''Service:''' Severely degraded

'''Fault status page:''' N/A - Internal

'''Lost transactions:''' CasperVend: None. CasperLet: None, provided in-world scripts are up-to-date.

We were performing maintenance on a database table in order to increase the size of the "inventory name" field used for associating items with CasperVend. This is a huge operation which takes hours (it's a 35 GB table).

With operations like this, we normally direct all database traffic to a single server while we work on another. Unfortunately, on this occasion we forgot to stop replication, so the operation was automatically replicated to the next server (the selected "production" server, which was handling all the traffic). This caused the table to lock, connections built up, and eventually the server stopped accepting connections.
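The database engine is not named above. Assuming MySQL with binary-log replication, one way to keep a long-running schema change on the server being worked on from replicating to the production server is to disable binary logging for that session only. A minimal Python sketch that just builds the statement sequence; the function name is hypothetical, setting `sql_log_bin` requires an administrative privilege, and all three statements must run on the same connection:

```python
from typing import List


def local_only_alter(table: str, alter_clause: str) -> List[str]:
    """Statements to alter `table` on this server without writing the change to the binary log.

    Assumes MySQL replication driven by the binary log. Identifiers are
    interpolated directly, so only pass trusted, pre-validated names.
    """
    return [
        "SET SESSION sql_log_bin = 0",  # this session's statements are not replicated
        f"ALTER TABLE {table} {alter_clause}",
        "SET SESSION sql_log_bin = 1",  # restore normal logging for the session
    ]
```

The trade-off is that the change must then be applied to each server separately, and every server has to be altered before traffic is moved back onto it.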

The outage began at 4:52PM SLT. Norsk found the issue and reported it to Casper at 5:21PM, and it was fixed at 5:23PM.

Due to our failovers, no CasperVend transactions were lost. Those using the very latest rental script (v1.32) should also have seen no issues. Those using older scripts may have experienced lost sales.

We apologise for this incident (which was our fault) and we will be reviewing our processes to ensure that this doesn't occur again.

== 2015-11-02 ==

'''Affected:''' All Services

'''Fault status page:''' http://status.ovh.com/?do=details&id=11304

'''Lost transactions:''' Minimal. Our critical processes were maintained.

At approximately 9:09 AM Second Life time on the 2nd of November, 2015, our hosting partners suffered a fiber cut in a tunnel a few kilometers from the datacentre. This severed '''all''' of the datacenter's routes, with the exception of one very slow backup line.

We now have two physically redundant routes to the datacentre, which means that any future fiber cut is very unlikely to cause a disruption in service.

== 2015-05-28 ==

'''Affected:''' All Services

'''Fault status page:''' http://status.ovh.com/?do=details&id=9603

'''Lost transactions:''' Minimal. Our critical processes were maintained.

At approximately 6pm SL time, a car collided with a telegraph pole belonging to our hosting partner, which severed connectivity to CasperTech services. The pole was located between the datacentre and Montreal.

We were aware of the problem immediately, and were on the phone with the datacentre right away to discuss it.

While the datacentre does have several redundant pipes, they currently all run along a single physical route. This is clearly unacceptable, and we have contacted the host to establish what their redundancy plan is for the future.
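A rough way to see why a single physical route is the weak point, assuming purely for illustration that a route is cut with probability p over some period and that cuts on physically separate routes are independent: losing every pipe on a shared route takes one event, while losing two separate routes takes two.

```latex
\begin{aligned}
P_{\text{shared route}} &= p \\
P_{\text{separate routes}} &= p_1 \, p_2 \approx p^2 \qquad (p_1 \approx p_2 \approx p)
\end{aligned}
```

With a hypothetical p = 0.01, that is a 1% chance of losing connectivity on the shared route versus about 0.01% with two independent routes. Independence is the key assumption: pipes on the same pole or in the same duct fail together, which is exactly what happened here.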

Latest revision as of 18:23, 29 February 2024

2024-02-27

Affected: All services

Disruption length: 30 minutes

Service: Web only

Fault status page: N/A

Lost transactions: None, only websites affected

We detected unusual activity on our ticket system, and discovered a flaw which could allow an attacker to view the last 10 items that an avatar had received (whether through purchases or bought for them as a gift). Immediately after detecting the issue, the websites were taken offline while a fix was implemented. The downtime was 30 minutes.

No other information about customers was disclosed by this defect.

2020-11-07

Affected: All services

Disruption length: 16 hours 13 minutes

Service: Full outage

Fault status page: N/A

Lost transactions: Most transactions recovered.

At 11:07PM SLT on Saturday, the datacentre complex which hosts our servers experienced what they called a "power incident", preventing our servers from communicating with each other over the internal network.

Initially the technicians hoped that the switches just needed to be rebooted, but after diagnosis it transpired that a significant amount of equipment needed to be replaced. Some components were not available, and due to COVID the datacentre are running a skeleton crew, so the repair effort was slow.

All of our servers remained powered on during the outage, but they were unable to communicate with each other. This prevented the API servers from accessing the database, taking all of our services offline.

Service was restored at 3:20PM SLT on Sunday, unfortunately making this the longest period of downtime we've ever experienced. When the network was restored everything immediately started working, with the exception of one of our MongoDB nodes which was automatically taken out of rotation. This node has now been fully restored. All outstanding transactions have now finished processing. Anything which is still missing can be safely refunded / adjusted / accounted for.

We have redundancy throughout our system and can lose half of our servers while remaining online. Unfortunately, we are entirely dependent on the private internal network, and since it is a single point of failure, I'll be talking with the datacentre about ways to avoid situations like this in the future.

Our infrastructure has been extremely reliable in the last few years, with the last significant outage dating back to 2018. We apologise for the inconvenience caused by this outage, but we are confident that we can continue to deliver an exceptionally reliable service going forward.

2018-11-25

Affected: All services

Disruption length: 7 hours 45 minutes

Service: Full outage

Fault status page: None yet

Lost transactions: None - All transactions recovered.

Services went down at approximately 8:15pm on 2018-11-24 and weren't recovered until 4am.

This incident appears to have been caused by a MongoDB bug. All three of our Mongo servers crashed simultaneously when WiredTigerLAS.wt suddenly ballooned from 4 KB to 800 GB.

This does appear to be a rare but known issue with MongoDB ( https://goo.gl/A7msRf ) and we're working with the developers to stop this from happening again.

Our off-grid transaction processor has done its job and is restoring all transactions that occurred during this period.

I'm sincerely sorry for the disruption caused by this incident.

2018-11-09

Affected: All services

Disruption length: 4 hours 15 minutes

Service: Full outage

Fault status page: None yet

Lost transactions: None - All transactions recovered.

At approximately 10:45PM on the 9th of November, 2018 (Second Life Time), our vRack configuration at the datacenter became corrupted, isolating two of our servers.

The vRack is a "virtual rack" system which connects all our servers together via a virtual internal network. We rely on it for transmission of sensitive information between our 6 core dedicated servers.

This caused a total outage which lasted until 3 AM when we were able to fix the broken configuration, an outage of four hours and 15 minutes.

The hosting company is still investigating what caused our vRack configuration to corrupt. We will post further information here when we have it.

2018-05-26

Affected: All services

Disruption length: 45 minutes

Service: Full outage

Fault status page: http://travaux.ovh.net/?do=details&id=31831

Lost transactions: None. All transactions recovered.

A fault at our host's datacenter caused an outage this morning for 45 minutes, starting at 10:12pm and ending at 10:57pm SLT. Service was restored automatically once the fault was fixed by the datacenter. OVH status ticket: http://travaux.ovh.net/?do=details&id=31831

2018-04-28

Affected: All services

Disruption length: 2.5 hours

Service: Full outage

Fault status page: N/A

Lost transactions: None. All transactions recovered.

At approximately 10:11 PM on the 28th of April, 2018, one of our database servers went offline, disrupting access to our websites. The failover kicked in and kept in-world service alive, but at 11:06 PM the failover database server went offline too.

Visitors to our websites received a message stating that we were "heavily overloaded". This wasn't the case; it is simply the generic message that the website code shows when it can't connect to the database.
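The failover behaviour described in this incident can be sketched roughly as follows. The primary database is tried first, then the failover server, and a generic error page is shown once both are gone. This is a minimal illustration in Python, not the site's actual code. `DatabaseUnavailable`, `connect_with_failover`, `render_page` and the exact message wording are hypothetical.

```python
from typing import Callable, Optional, Sequence

# Hypothetical wording; the report only says the page claimed we were "heavily overloaded".
OVERLOADED_MESSAGE = "We are heavily overloaded. Please try again later."


class DatabaseUnavailable(Exception):
    """Raised by a connector when its database server cannot be reached."""


def connect_with_failover(connectors: Sequence[Callable[[], object]]) -> Optional[object]:
    """Try each database server in order (primary first); return the first live connection."""
    for connect in connectors:
        try:
            return connect()
        except DatabaseUnavailable:
            continue  # this server is down, so fall through to the next one
    return None  # every server in the list failed


def render_page(connectors: Sequence[Callable[[], object]]) -> str:
    conn = connect_with_failover(connectors)
    if conn is None:
        # One catch-all message, whatever the real cause, which is how an
        # unreachable database ends up being reported as "overloaded".
        return OVERLOADED_MESSAGE
    return f"page rendered using {conn}"
```

If both database servers are unreachable, every connector raises and the visitor sees the catch-all text. Distinguishing connection failures from genuine overload in that branch would give visitors a more accurate message.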

Service was restored at 01:36 AM.

The cause of this is rather embarrassing, but we operate a policy of full transparency so we owe you the honest truth.

Last month we acquired two new massive servers to power our database, "Grunt" and "Sandman". However, when setting up the machines, I completely neglected to set up automatic billing - so basically the servers were unpaid and were suspended.

I am truly sorry for this outage. It shouldn't have happened; it's never happened before and will not happen again!

~Casper

2017-06-14

Affected: All services

Disruption length: 4 hours

Service: Full outage

Fault status page: N/A

Lost transactions: None, though some may not have appeared on the sales pages.

At approximately 9:30pm SL time on the 14th of June, 2017, we experienced an outage which impacted all of our servers.

The root cause of the outage was severe packet loss on our internal vRack (internal routing between our servers). This resulted in file locking issues on our NFS storage cluster - file locks were not released, causing all of our services to grind to a halt. At around 1:30am, service was restored by restarting the system.

The NFS file locking issue also, unfortunately, caused a few vendors to revert to the default profile. As a result of the kind efforts of many of our users, we were able to find the cause of this and correct the logic issue.

The use of NFS isn't ideal, but so far has been the only thing which has been capable of keeping up with our load.

As a result of this outage, we're accelerating our deployment schedule for the next-gen casperdns service. This involves deployment of the new storage cluster code we've been working on since March.

Please see Project Spectre.

We sincerely apologise for any inconvenience caused. Please know that we are very aware how frustrating these outages are. We're working to bring you a much more reliable platform.

2017-05-11

Affected: CasperVend only (some customers)

Disruption length: 25 minutes

Service: Partial outage

Fault status page: N/A

Lost transactions: None

At approximately 18:45 SL time on the 11th of May, 2017, one of our servers experienced a kernel panic. The problem was noticed by datacenter technicians and full service was restored at 19:10.

Total downtime was 25 minutes.

Unfortunately, however, any vendors which had reset during this period were stuck on an offline profile, and were only fixable with a reset (or a region restart). This is why some were still reporting issues after the service came back online.

I'm looking into why the vendors got stuck in the offline profile. This shouldn't have happened - they are supposed to re-establish themselves automatically.

I will post an update here when this has been fully investigated.

I apologise for any inconvenience caused.

2017-04-26

Affected: All services except CasperSafe

Disruption length: 29 minutes

Service: Partial outage

Fault status page: http://status.ovh.co.uk/?do=details&id=14548

Lost transactions: None

At 7:50AM SLT on the 26th of April, 2017, OVH (our hosts) suffered a power glitch which severed our database servers from the rest of the network. Both the primary and the secondary database servers were affected.

Power was briefly restored at 8:06, for just two minutes, before going down again.

Service was finally restored at 8:21AM SLT.

Total downtime 29 minutes.
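The 29-minute figure is the time from 7:50 to 8:21, minus the two minutes of restored power from 8:06 to 8:08. The 8:08 end of that window is inferred from "for just two minutes". Below is a small Python sketch of the arithmetic, summing separate outage windows. `total_downtime` is a hypothetical helper, not part of any CasperTech tooling.

```python
from datetime import datetime, timedelta
from typing import Iterable, Tuple


def total_downtime(windows: Iterable[Tuple[datetime, datetime]]) -> timedelta:
    """Sum the length of each (start, end) outage window; windows must not overlap."""
    total = timedelta()
    for start, end in windows:
        total += end - start
    return total


# 2017-04-26 (SLT): down 7:50-8:06, briefly back, then down again 8:08-8:21.
windows = [
    (datetime(2017, 4, 26, 7, 50), datetime(2017, 4, 26, 8, 6)),
    (datetime(2017, 4, 26, 8, 8), datetime(2017, 4, 26, 8, 21)),
]
print(total_downtime(windows) // timedelta(minutes=1))  # 29
```

Each endpoint carries a full date, so windows that cross midnight work too. The 2018-11-09 vRack outage, from 10:45PM to 3AM, comes out as `timedelta(hours=4, minutes=15)`.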

Note that our servers themselves did not lose power, only some intermediate networking equipment.

No transactions should have been lost during this incident, as our transaction servers remained online.

March 2017 Chaos (aka "the month that Casper broke SL")

We had two large outages during March 2017, and one smaller one. They were all somewhat (but not directly) related.

Please know that I completely understand that you depend on us, and I give this message with humility, respect and gratitude to our loyal customers, friends in the community, and particularly our support volunteers who pulled all-nighters during both of the extended outage periods, staying awake all night to try to answer questions and to make sure that our affected customers were not ignored. I owe you all a great deal.

I have given a technical description of the issues below. For those who are less technical, it breaks down as follows:

- We were attacked on the 2nd/3rd of March by someone unknown.

- Some of the fixes provided by the datacentre malfunctioned and caused the extended downtime on the 15th/16th and 17th of March.

Please know that I am not trying to shift blame, only give you an accurate description of the problems. I accept full responsibility for the failures.

That being said, this kind of thing does not happen a lot. You may not have heard about outages from similar operators in Second Life, but the fact is that they generally do not publish them, as we do. I believe in openness.

I would like to personally apologise for the disruption, and I want to make it very clear that I am committed to doing better in the future. I know that you rightly expect it.

~Casper

Important: Unfortunately, due to an indirectly related issue, a small number of in-world vendors may have lost their configuration during this downtime. Please check your in-world units to make sure that their configuration is intact. We have already repaired this issue and apologise for the inconvenience.

2017-03-17

Affected: All services

Disruption length: <1 hour

Service: Partial outage

Fault status page: N/A

Lost transactions: None reported

At approximately 3:30AM SLT on the 17th of March, an issue identical to the 2017-03-15 outage struck. This time we were able to find and fix the fault while it was still present.

You are all owed an explanation, so I will try to be as thorough as possible.

During the DDoS attack on the 2nd of March, we made many configuration changes in order to bring things back online as soon as we could. The attackers were being persistent and malicious, so we had to do what we could to mitigate. Part of this was implementing OVH's global IP firewall (we had previously only used rented Cisco firewalls, but these were being overloaded).

Following the attack, CasperLet had some lag and reliability issues. We traced this to an issue with DNS lookups failing on Badwolf, one of the servers that power CasperLet, and further, we found that it was the OVH global IP firewall which was causing these DNS lookups to fail. No problem, we disabled the firewall on Badwolf and reported it to OVH engineers. The other servers were still firewalled, but had no such DNS lookup problems.

The cause of this latest outage, and the outage on the 15th, was that this problem "spread" to the other servers, which were still using the OVH firewall. The firewall configurations for the servers are set to explicitly permit UDP traffic on port 53 (DNS), so this is some stupid bug that has been introduced into the OVH firewall.

We have now disabled the global IP firewall with OVH and implemented our own measures to protect our servers instead.

I have had a very heated discussion with OVH today about this. They refuse to accept that their firewall is malfunctioning, despite the very clear evidence that it is. I am not happy. I will update this post once they have provided a satisfactory answer.

Important: Unfortunately, due to an indirectly related issue, a small number of in-world vendors may have lost their configuration during this downtime. Please check your in-world units to make sure that their configuration is intact. We have already repaired this issue and apologise for the inconvenience.

2017-03-15

Affected: All services

Disruption length: ~8 hours

Service: Total outage

Fault status page: N/A

Lost transactions: CasperVend: 5% CasperLet: 5% (v1.40 only)

At approximately 17:50 SLT on the 15th of March, our services became unreliable and soon went down entirely.

This (as with the previous downtime on the 2nd) occurred while I was away from home and just after I went to bed. This led many to suspect that it was another DDoS attack; however, this was not the case.

When I awoke at approximately 1am SLT, I immediately investigated but found that the original cause was already gone (there were some contention issues which were fixed by a server reboot). Because of this, I assumed that the issue was triggered by a power outage in the same rack that our servers are in, as announced on the OVH status page.

However, this was not genuinely the cause. We discovered the real cause during the next downtime (see above).

Important: Unfortunately, due to an indirectly related issue, a small number of in-world vendors may have lost their configuration during this downtime. Please check your in-world units to make sure that their configuration is intact. We have already repaired this issue and apologise for the inconvenience.

2017-03-02

Affected: All services

Disruption length: ~12 hours

Service: Total outage

Fault status page: N/A

Lost transactions: CasperVend: 30% CasperLet: 50%

On the 2nd of March at around 10:30am PDT, our services started to become unstable. I (Casper) was notified, but wasn't at my desk. I was able to restore service by restarting the servers. This happened a few more times, causing minimal disruption and lasting 20 minutes or so. I finally got to my desk at around 4pm SLT, and checked the servers to make sure they were healthy. Everything seemed fine, but I wasn't able to see what actually caused the issues.

It turns out that these small pockets of disruption were test runs by the attackers.

At approximately 17:45 SLT, a sustained DDoS attack began, taking our services offline completely. This was not a typical DDoS attack (those are now completely mitigated by our hosting provider automatically), but something known as a Slowloris attack. This type of attack is unusual because our servers kept operating just fine, with low load and no obvious resource issues, so no alarms were triggered and the datacentre technicians were not alerted. Unfortunately, this occurred just after I had gone to bed (which was likely not a coincidence, but that is merely speculation).
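A Slowloris client opens many connections and sends its request headers a few bytes at a time without ever finishing them. Each connection costs the server almost nothing in CPU but holds a connection slot open, which is why load can stay low while service is unavailable. One common mitigation is to put a single deadline on reading the whole header block. The Python `asyncio` sketch below shows that idea. It is not the kernel and software patches CasperTech actually applied, and `read_request_headers`, `handle_client` and the 10-second limit are assumptions.

```python
import asyncio
from typing import Optional

HEADER_TIMEOUT_SECONDS = 10.0  # assumed limit; tune for real traffic


async def read_request_headers(reader: asyncio.StreamReader,
                               timeout: float = HEADER_TIMEOUT_SECONDS) -> Optional[bytes]:
    """Return the raw header block, or None if the client is too slow or misbehaves.

    The deadline covers the *whole* header read, so trickling in a byte every few
    seconds does not reset it.
    """
    try:
        return await asyncio.wait_for(reader.readuntil(b"\r\n\r\n"), timeout)
    except (asyncio.TimeoutError, asyncio.IncompleteReadError, asyncio.LimitOverrunError):
        return None


async def handle_client(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    headers = await read_request_headers(reader)
    if headers is not None:
        writer.write(b"HTTP/1.1 200 OK\r\nContent-Length: 2\r\nConnection: close\r\n\r\nok")
        await writer.drain()
    # Close either way: slow or incomplete clients get no response and their slot is freed.
    writer.close()
    await writer.wait_closed()
```

It would be served with `asyncio.start_server(handle_client, host, port)`. A fixed deadline also cuts off genuinely slow clients, so the value needs to leave headroom for them.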

Here is the timeline:

[17:45 SLT] - Attack began

[~19:00 SLT] - Due to a lack of information, it was GUESSED that the outage was related to some OVH issues, but this was later found to be unrelated.

[00:30 SLT] - Casper woke and immediately began investigating

[00:45 SLT] - Casper began work to separate the CasperVend and CasperLet sites from our in-world systems, so that our in-world services could get back online.

[01:00 SLT] - Deliveries start to trickle through

[01:20 SLT] - Attack vector shifts to target the webservers rather than the PHP scripts. Deliveries stop again.

[02:30 SLT] - Blocked all IP addresses except for Second Life IPs. All inworld systems back online. Websites offline to protect other systems.

[03:30 SLT] - Attack vector shifts to combined DNS amplification and SYN-flood attack against our public IPs. OVH anti-ddos triggers and vacuums up the traffic, but also blocks a lot of legit traffic (partial disruption)

[05:12 SLT] - With the help of OVH engineers, we started to bring services back online despite the ongoing attack, starting with CasperLet

[05:27 SLT] - CasperVend back online

- By this stage, our inworld services were working again. Total downtime 12 hours -

[06:00 SLT] - The websites were brought back online

[07:00 SLT] - We completed recovery of lost transactions

[08:34 SLT] - Websites go down again after another attack vector shift. Inworld services remain online and protected.

[10:00 SLT] - We manually reboot our servers to apply some kernel and software patches to mitigate the new attack vector.

[10:11 SLT] - All services restored

[11:47 SLT] - "All clear" announced

Even after the "All Clear" was announced, the attack continued, but wasn't able to penetrate our newly configured defences. The attack stopped at roughly 2pm SLT.

2016-06-12

Affected: All services

Disruption length: ~4-6 hours

Service: Severely delayed transactions

Fault status page: N/A

Lost transactions: CasperVend: None. CasperLet: None (if up to date).

At approximately 00:29 SL Time on the 12th of June, 2016, one of our storage servers ran out of inodes. While the machine still had 60% free disk space, there were effectively too many files (about 6.5 billion), and this prevented any further writes. Unfortunately, since we were monitoring only disk space and not inode count, no alert or alarm was triggered and our failover did not kick in. The other servers did their intended job and saved all their received transactions locally, so zero transactions were lost; they were just severely delayed.
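Checking free inodes alongside free space is what would have caught this. Below is a minimal Python sketch. It assumes a POSIX host (`os.statvfs` is not available on Windows) and uses assumed 10% thresholds. `evaluate` and `storage_alerts` are hypothetical names, and this is not the monitoring CasperTech runs.

```python
import os
from typing import List

# Assumed alert thresholds: warn when less than 10% of either resource is left.
MIN_FREE_SPACE_FRACTION = 0.10
MIN_FREE_INODE_FRACTION = 0.10


def evaluate(free_bytes: int, total_bytes: int, free_inodes: int, total_inodes: int,
             label: str = "storage") -> List[str]:
    """Return alert messages; bytes and inodes are checked independently."""
    alerts = []
    if total_bytes and free_bytes / total_bytes < MIN_FREE_SPACE_FRACTION:
        alerts.append(f"{label}: low disk space")
    # Some filesystems report 0 total inodes (no fixed inode table); skip those.
    if total_inodes and free_inodes / total_inodes < MIN_FREE_INODE_FRACTION:
        alerts.append(f"{label}: low free inodes")
    return alerts


def storage_alerts(path: str) -> List[str]:
    """Read live figures for the filesystem containing `path` (POSIX only)."""
    st = os.statvfs(path)
    return evaluate(
        free_bytes=st.f_bavail * st.f_frsize,  # space available to unprivileged users
        total_bytes=st.f_blocks * st.f_frsize,
        free_inodes=st.f_ffree,
        total_inodes=st.f_files,
        label=path,
    )
```

Figures like those in this incident (60% of space free, inodes exhausted) make `evaluate` raise only the inode alert. A space-only check would never produce it.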

We were able to start diagnosing the issue at approximately 04:30 SL Time, and by 05:00 we had restored partial service. However, the service was not fully restored until around 6:30AM.

The all clear was given at 8:15 AM.

We sincerely apologise for this outage, and we are taking steps to make sure it never happens again. We hope that our complete transparency regarding this issue, coupled with the fact that our backup systems ensured your transactions were recovered, will reinforce the fact that we are absolutely committed to ensuring that you can rely on our service.

2016-02-11

Affected: All services

Disruption length: 10 minutes

Service: Total outage

Fault status page: http://status.ovh.net/?do=details&id=12169 (http://travaux.ovh.net/?do=details&id=16568)

Lost transactions: CasperVend: None. CasperLet: None (if up to date).

At approximately 7:55 on the 11th of February, Russian ISP GlobalNet.ru issued a faulty BGP announcement (a BGP leak).

Thanks to one of our users for very eloquently describing: "BGP = Border Gateway Protocol. it's how routers of neighboring networks advertise which routes are most efficient. something advertises the wrong route, is like Google Maps telling everyone to skip the freeway and use an alley instead."

This affected thousands of hosts around the internet (basically, anybody who peers with GlobalNet, which is a lot of people).

However, our datacentre was the first to respond to the incident, and we were back online within a few minutes.

2016-02-10 - Planned maintenance

We shut down for approximately 10 minutes at 4:30AM (Second Life Time) on the 10th of February.

This was a planned maintenance window, with two goals:

- To apply some kernel patches to help mitigate DDoS attacks in the future

- To test the new CasperLet failsafe mechanism in a controlled manner.

The maintenance completed successfully.

2016-02-08

Affected: All services

Disruption length: 6 hours

Service: Severely degraded

Fault status page: N/A - Internal

Lost transactions: CasperVend: None. CasperLet: All transactions lost during this period - see information below.

Short version for technophobes: We were attacked. Nothing was compromised, we are secure, they just overloaded us. We've implemented fixes to make sure this doesn't happen again, and we've already fixed the problem which meant CasperLet transactions were lost. Sorry for the inconvenience.

Now for the geeky stuff

At approximately 6:30PM Second Life time, our servers were hit with a DDoS attack. Unfortunately, the attack went undetected by the technicians at the datacentre (I'll explain why a bit later), and it occurred at a time when neither my staff nor I were available.

I discovered the issue at 1AM Second Life time, and since the traffic was all coming from the same subnet, I was able to block the attack at the firewall very quickly, and bring our services back online.

This attack was very unusual in a few ways. Firstly, the MRTG graph showed that the traffic peaked at a ludicrous 785 Gbps, with a sustained average of around 600 Gbps. This would make the attack one of the largest ever recorded in internet history, and while technically possible (the datacentre does have this much capacity), it didn't seem likely, especially since other servers at the datacentre were unaffected.

In addition, while OVH's anti-ddos system did kick in, it automatically disengaged after a few minutes.

It took a long time and a lot of discussion with the technicians at the datacentre to finally work out what happened, but the long and short of it... this was an internal attack from thousands of compromised servers in the same datacentre, and specifically targeted a certain configuration we use.

The datacentre we use is run by OVH, which is the largest server company in the world. They have something like 200,000 servers in the same datacentre we use, and as is always true in public datacentres, many of those boxes were compromised and were used to launch the attack.

Internally, we use NFS, encrypted using Kerberos, and that runs over a particular port via a vRack (virtual rack), on secondary ethernet adapters. The attack was a UDP broadcast, reflection and amplification attack. While our systems weren't specifically vulnerable to this attack vector (i.e. they couldn't use our servers to amplify their attack on others), the attackers were able to overload our internal ethernet connections and effectively render our services offline.

This wasn't detected quickly by the datacentre technicians, and wasn't mitigated by the OVH anti-ddos system, because it was an internal attack and this vector wasn't being properly monitored.

The standard monitoring that we have in place on our servers (ping, HTTP) never detected a problem either, because these services were up; it was the internal connection between our servers which was affected.

The good news is that this particular attack vector is permanently closed now, because we have simply blocked any other internal hosts which don't belong to us at the router level (OVH very conveniently allows you to add firewall rules to their internal routing).
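The fix amounts to a default-deny allowlist on the internal network: traffic is accepted only when its source is one of our own machines. The rules themselves live in OVH's internal routing, so the Python below only sketches the matching logic with the standard `ipaddress` module. The addresses are hypothetical placeholders, since the report does not give the real ones.

```python
import ipaddress
from typing import List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Hypothetical internal addresses of our own servers (placeholders only).
OUR_INTERNAL_NETWORKS: List[Network] = [
    ipaddress.ip_network("10.0.0.11/32"),
    ipaddress.ip_network("10.0.0.12/32"),
    ipaddress.ip_network("192.168.50.0/29"),
]


def permit_internal(src: str, allowed: List[Network] = OUR_INTERNAL_NETWORKS) -> bool:
    """Default deny: accept an internal packet only if its source is one of ours."""
    try:
        addr = ipaddress.ip_address(src)
    except ValueError:
        return False  # malformed source address: drop it
    # `in` is simply False when the IP versions differ, so IPv6 never matches here.
    return any(addr in net for net in allowed)
```

Because the default is to refuse, any compromised neighbour in the same datacentre is dropped without needing a rule of its own.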

Were we specifically targeted? It's unclear. It wasn't only us that was affected, several other hosts who also utilise NFS were taken offline too, though it was not a widespread issue.

What is slightly odd, though, is that at the same time we received notice from another host, that they had received an attack on a domain name which I personally own, but hasn't been in use for ten years. This may be a coincidence, I'm not sure.

If you've read this far and you haven't already tuned out, you probably know that a DDoS attack is not a security compromise; it's just a denial of service. Our security was not broken.

Thanks to our failsafes, CasperVend was able to fully recover all transactions which occurred during that period. However, unfortunately CasperLet's failsafes did not work, and all CasperLet payments over that period were lost. We've already completed work to fix this problem, and if anything like this occurs again, all CasperLet payments should be recovered once the servers come back online.
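The report doesn't describe how the failsafes work internally. The behaviour it describes is the classic store-and-forward pattern: payments are held locally while the central servers are unreachable, then recovered once they return. Below is a minimal Python sketch under that assumption. `LocalSpool`, `record_transaction` and the JSON-lines journal are hypothetical. A real system would also need idempotent delivery (for example, unique transaction IDs), so that a replay after a partial failure cannot double-count a payment.

```python
import json
import os
from typing import Callable, List


class LocalSpool:
    """Append-only local journal for transactions that could not be delivered centrally."""

    def __init__(self, path: str):
        self.path = path

    def append(self, txn: dict) -> None:
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(txn) + "\n")
            f.flush()
            os.fsync(f.fileno())  # make sure the record survives a crash

    def replay(self, deliver: Callable[[dict], bool]) -> int:
        """Re-send spooled transactions, keeping any that still fail; return the count delivered."""
        if not os.path.exists(self.path):
            return 0
        with open(self.path, encoding="utf-8") as f:
            pending = [json.loads(line) for line in f if line.strip()]
        remaining: List[dict] = []
        delivered = 0
        for txn in pending:
            if deliver(txn):
                delivered += 1
            else:
                remaining.append(txn)
        tmp = self.path + ".tmp"
        with open(tmp, "w", encoding="utf-8") as f:
            for txn in remaining:
                f.write(json.dumps(txn) + "\n")
        os.replace(tmp, self.path)  # atomically swap in the shortened journal
        return delivered


def record_transaction(txn: dict, send_central: Callable[[dict], bool], spool: LocalSpool) -> None:
    """Try the central servers first; on failure, keep the transaction locally."""
    if not send_central(txn):
        spool.append(txn)
```

When the central servers come back, calling `replay` with the normal delivery function drains the journal in its original order.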

We sincerely apologise for the inconvenience caused by this outage. We understand that you depend on our reliability, and we are proud to remain the largest and most dependable provider even taking this outage into account.

~Casper

2015-12-28

Affected: CasperVend, CasperLet

Disruption length: 31 minutes

Service: Severely degraded

Fault status page: N/A - Internal

Lost transactions: CasperVend: None. CasperLet: None, providing in-world scripts are up-to-date.

We were performing maintenance on a database table in order to increase the size of the "inventory name" field used for associating items with CasperVend. This is a huge operation which takes hours (it's a 35 GB table).

With operations like this, we normally direct all database traffic to a single server while we work on another. Unfortunately, on this occasion we forgot to stop replication, so the operation was automatically replicated to the next server, which was the selected "production" server handling all the traffic. This caused the table to lock, connections built up, and eventually the server stopped accepting connections.

The outage began at 4:52PM SLT. Norsk found the issue and reported it to Casper at 5:21PM, and it was fixed at 5:23PM.

Due to our failovers, no CasperVend transactions were lost. Those using the very latest rental script (v1.32) should have also seen no issues. Those using older scripts may have experienced lost sales.

We apologise for this incident (which was our fault) and we will be reviewing our processes to ensure that this doesn't occur again.

2015-11-02

Affected: All Services

Disruption length: 5 hours

Service: Severely degraded

Fault status page: http://status.ovh.com/?do=details&id=11304

Lost transactions: Minimal. Our critical processes were maintained.

At approximately 9:09 AM Second Life time on the 2nd of November, 2015, our hosting partners suffered a fiber cut, in a tunnel a few kilometers from the datacentre. This severed all of the datacenter's routes, with the exception of one very slow backup line.

We were aware of the problem immediately, and were in touch with the datacentre within minutes. They provisioned a special route for us which we could use for absolutely essential traffic only, flowing over the one remaining backup line.

The result of this was that all of our services were severely degraded. However, we were prioritising transaction and payment traffic over the backup route, so thankfully we were still processing transactions (around 10,000 transactions were processed during the outage, with roughly a 95% success rate).
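Prioritising transaction and payment traffic over a constrained backup route is essentially a priority queue: each time the link has capacity, the most important waiting message goes first. Below is a minimal Python sketch using `heapq`. The traffic classes and their ordering are assumptions for illustration, not the actual rules used during this outage.

```python
import heapq
import itertools
from typing import List, Tuple

# Lower number = higher priority. Class names are illustrative assumptions.
PRIORITY = {"payment": 0, "delivery": 1, "website": 2}


class PriorityLink:
    """Schedule messages over a constrained link, most important traffic first."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, kind: str, payload: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[kind], next(self._seq), payload))

    def send_batch(self, capacity: int) -> List[str]:
        """Send up to `capacity` messages this tick; the rest wait for the next tick."""
        sent = []
        while self._heap and len(sent) < capacity:
            _, _, payload = heapq.heappop(self._heap)
            sent.append(payload)
        return sent

    def __len__(self) -> int:
        return len(self._heap)
```

Strict priority like this can starve lower classes for as long as payment traffic keeps arriving. That is acceptable when, as during this outage, only essential traffic is meant to get through.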

The datacentre has four main trunks over two distinct physical paths: two heading north towards Montreal, and two heading south towards Newark. When a similar outage affected us in May, the two southern links had not yet been established (mostly due to a long, four-year bureaucratic process involved with laying cable across the international border between the US and Canada). At the time, OVH (the hosts) assured us that they would have the new link to Newark in place by September.

Unfortunately, these new redundant southern routes were not in place yet. But by pure coincidence, they were only a couple of days away from being installed. Since the new routes were so close to being finalised, they were able to rush them into service. At 2pm SL time, the uplink was established and CasperTech services were restored.

We declared "ALL CLEAR" at 5:19pm SL time, once the datacenter had confirmed that the new routes were stable.

The links which had been severed were finally spliced together and restored at 9:10pm SL time.

As a result, we now have two physically redundant routes to the datacentre, so any future fiber cut is very unlikely to cause a disruption in service.

2015-05-28

Affected: All Services

Disruption length: 1 hour 30 minutes

Service: Degraded

Fault status page: http://status.ovh.com/?do=details&id=9603

Lost transactions: Minimal. Our critical processes were maintained.

At approximately 6pm SL time, a car collided with a telegraph pole belonging to our hosting partner, which severed connectivity to CasperTech services. The pole was located between the datacentre and Montreal.

We were aware of the problem immediately, and were on the phone right away with the datacentre to discuss the problem.

While the datacentre does have several redundant pipes, they are all currently running along a single physical route. This is clearly unacceptable and we have contacted the host in order to establish what their redundancy plan is for the future.

We have been assured that this situation will be improved as early as July, and will be completely solved by September, when their southern route from Newark will be installed.

Service was restored at approximately 7:30pm SL time.