Outages

Revision as of 16:41, 11 February 2016

11/02/2016

Affected: All services

Disruption length: 10 minutes

Service: Total outage

Fault status page: http://status.ovh.net/?do=details&id=12169 (http://travaux.ovh.net/?do=details&id=16568)

Lost transactions: CasperVend: None. CasperLet: None (if up to date).

At approximately 7:55 on the 11th of February, Russian ISP GlobalNet.ru issued a faulty BGP announcement (a BGP route leak).

Thanks to one of our users for very eloquently describing: "BGP = Border Gateway Protocol. it's how routers of neighboring networks advertise which routes are most efficient. something advertises the wrong route, is like Google Maps telling everyone to skip the freeway and use an alley instead."

This affected thousands of hosts around the internet (basically, anybody who peers with GlobalNet, which is a lot of people).

However, our datacentre was the first to respond to the incident, and we were back online within a few minutes.
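As a rough illustration of the analogy above, the Python sketch below picks a route by shortest AS path alone. This is a deliberate simplification: real BGP best-path selection weighs several other attributes first, and the prefix and AS numbers are documentation-only values, not the ones involved in this incident.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str
    as_path: tuple  # AS numbers the announcement has passed through


def best_route(routes):
    """Pick the candidate with the shortest AS path (simplified rule)."""
    return min(routes, key=lambda r: len(r.as_path))


# Hypothetical example values (documentation prefix and AS numbers).
legitimate = Route("192.0.2.0/24", (64500, 64501, 64502))
leaked = Route("192.0.2.0/24", (64510, 64502))

# The faulty announcement looks "shorter", so it attracts the traffic.
chosen = best_route([legitimate, leaked])
print(chosen.as_path)
```

Once the faulty announcement is withdrawn, the legitimate route is the only candidate again and traffic returns to its normal path.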

10/02/2016 - Planned maintenance

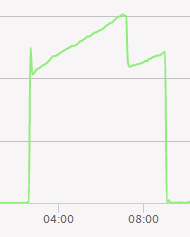

We shut down for approximately 10 minutes at 4:30AM (Second Life Time) on the 10th of February.

This was a planned maintenance window, with two goals:

- To apply some kernel patches to help mitigate DDoS attacks in the future

- To test the new CasperLet failsafe mechanism in a controlled manner.

The maintenance completed successfully.

08/02/2016

Affected: All services

Disruption length: 6 hours

Service: Severely degraded

Fault status page: N/A - Internal

Lost transactions: CasperVend: None. CasperLet: All transactions lost during this period - see information below.

Short version for technophobes: We were attacked. Nothing was compromised, we are secure, they just overloaded us. We've implemented fixes to make sure this doesn't happen again, and we've already fixed the problem which meant CasperLet transactions were lost. Sorry for the inconvenience.

Now for the geeky stuff

At approximately 6:30PM Second Life time, our servers were hit with a DDoS attack. Unfortunately, the attack went undetected by the technicians at the datacentre (I'll explain why a bit later), and it occurred at a time when my staff and I were not available.

I discovered the issue at 1AM Second Life time, and since the traffic was all coming from the same subnet, I was able to block the attack at the firewall very quickly, and bring our services back online.
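When attack traffic comes from a single subnet, one block rule can cover all of it. The Python sketch below shows one way to work out the smallest network that contains a set of source addresses. The function name and the addresses are hypothetical (documentation range), not the actual attack sources or the firewall tooling we used.

```python
import ipaddress


def smallest_common_network(addresses):
    """Return the smallest IPv4/IPv6 network containing every address."""
    addrs = [ipaddress.ip_address(a) for a in addresses]
    # Start with a single-host network and widen it one bit at a time.
    net = ipaddress.ip_network(addresses[0])
    while not all(a in net for a in addrs):
        net = net.supernet()
    return net


# Hypothetical source addresses seen in the firewall log.
sources = ["198.51.100.5", "198.51.100.77", "198.51.100.200"]
print(smallest_common_network(sources))
```

The resulting network can then be used as the source match in a single firewall rule.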

This attack was very unusual in a few ways. Firstly, the MRTG graph showed that the amount of traffic peaked at a ludicrous 785 Gbps, and had a sustained average of around 600 Gbps. This would make the attack one of the largest ever recorded in internet history, and while technically possible (the datacentre does have this much capacity), it didn't seem likely, especially since other servers at the datacentre were unaffected.

In addition, while OVH's anti-DDoS system did kick in, it automatically disengaged after a few minutes.

It took a long time and a lot of discussion with the technicians at the datacentre to finally work out what happened, but the long and short of it is that this was an internal attack from thousands of compromised servers in the same datacentre, specifically targeting a certain configuration we use.

The datacentre we use is run by OVH, which is the largest server company in the world. They have something like 200,000 servers in the same datacentre we use, and as is always true in public datacentres, many of those boxes were compromised, and were used to launch the attack.

Internally, we use NFS, encrypted using Kerberos, and that runs over a particular port via a vRack (virtual rack), on secondary ethernet adapters. The attack was a UDP broadcast, reflection and amplification attack. While our systems weren't specifically vulnerable to this attack vector (i.e. they couldn't use our servers to amplify their attack on others), they were able to overload our internal ethernet connections and effectively render our services offline.

This wasn't detected quickly by the datacentre technicians, and wasn't mitigated by the OVH anti-DDoS system, because it was an internal attack and this vector wasn't being properly monitored.

The standard monitoring that we have in place on our servers (ping, http) never detected a problem either, because these services were up; it was the internal connection between our servers which was affected.
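A check that covers this blind spot has to exercise the internal link itself rather than the public-facing services. The Python sketch below is one minimal way to do that, assuming a TCP service is reachable over the internal network. The function name, host and port are hypothetical, not our actual monitoring setup.

```python
import socket


def internal_link_ok(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False


if __name__ == "__main__":
    # Hypothetical internal address and port of a peer server.
    print(internal_link_ok("127.0.0.1", 9, timeout=0.5))
```

Run from each server against its peers' internal addresses, a check like this would fail when the internal connection is saturated, even while ping and http on the public side still respond.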

The good news is that this particular attack vector is permanently closed now, because we have simply blocked any other internal hosts which don't belong to us at the router level (OVH very conveniently allows you to add firewall rules to their internal routing).
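The logic of that rule is a simple allowlist: internal traffic is accepted only if its source belongs to one of our own networks, and everything else is dropped. The Python sketch below models that decision. The network range and names are hypothetical, and the real rule lives in OVH's router firewall rather than in code like this.

```python
import ipaddress

# Hypothetical internal range assigned to our own servers.
OWN_NETWORKS = [ipaddress.ip_network("10.0.0.0/29")]


def allow_internal_packet(src, own_networks=OWN_NETWORKS):
    """Accept a packet only if its source is in one of our own networks."""
    addr = ipaddress.ip_address(src)
    return any(addr in net for net in own_networks)


print(allow_internal_packet("10.0.0.3"))  # one of ours
print(allow_internal_packet("10.0.0.9"))  # some other internal host
```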

Were we specifically targeted? It's unclear. We weren't the only ones affected: several other hosts who also utilise NFS were taken offline too, though it was not a widespread issue.

What is slightly odd, though, is that at the same time we received notice from another host that they had received an attack on a domain name which I personally own, but which hasn't been in use for ten years. This may be a coincidence, I'm not sure.

If you've read this far and you haven't already tuned out, you probably know that a DDoS attack is not compromising, it's just a denial of service. Our security was not broken.

Thanks to our failsafes, CasperVend was able to fully recover all transactions which occurred during that period. However, unfortunately CasperLet's failsafes did not work, and all CasperLet payments over that period were lost. We've already completed work to fix this problem, and if anything like this occurs again, all CasperLet payments should be recovered once the servers come back online.
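The general idea behind a failsafe of this kind is store-and-forward: payments are held locally while the server is unreachable and replayed in order once it responds again. The Python sketch below illustrates that pattern only. The `PaymentQueue` class and the `send` callback are hypothetical and do not describe how the CasperLet scripts are actually implemented.

```python
from collections import deque


class PaymentQueue:
    """Hold payments until the server confirms it has received them."""

    def __init__(self, send):
        self._send = send  # callable returning True on confirmed delivery
        self._pending = deque()

    def record(self, payment):
        """Queue a payment and try to deliver everything outstanding."""
        self._pending.append(payment)
        return self.flush()

    def flush(self):
        """Deliver in order; stop at the first failure. Returns count left."""
        while self._pending:
            if not self._send(self._pending[0]):
                break
            self._pending.popleft()
        return len(self._pending)
```

A payment is removed from the queue only after delivery is confirmed, so an outage delays transactions instead of losing them. Calling `flush()` periodically replays anything still pending.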

We sincerely apologise for the inconvenience caused by this outage. We understand that you depend on our reliability, and we are proud to remain the largest and most dependable provider even taking this outage into account.

~Casper

28/12/2015

Affected: CasperVend, CasperLet

Disruption length: 31 minutes

Service: Severely degraded

Fault status page: N/A - Internal

Lost transactions: CasperVend: None. CasperLet: None, providing in-world scripts are up-to-date.

We were performing maintenance on a database table, in order to increase the size of the "inventory name" field used for associating items with CasperVend. This is a huge operation which takes hours (it's a 35 GB table).

With operations like this, we normally direct all database traffic to a single server, while we work on another. Unfortunately, on this occasion, we forgot to stop the replication, so the operation automatically moved on to the next server (which was the selected "production" server, handling all the traffic). This caused the table to lock, which in turn caused connections to build up until the server eventually stopped accepting them.

The outage began at 4:52PM SLT. Norsk found the issue and reported it to Casper at 5:21PM, and it was fixed at 5:23PM.

Due to our failovers, no CasperVend transactions were lost. Those using the very latest rental script (v1.32) should have also seen no issues. Those using older scripts may have experienced lost sales.

We apologise for this incident (which was our fault) and we will be reviewing our processes to ensure that this doesn't occur again.

02/11/2015

Affected: All Services

Disruption length: 5 hours

Service: Severely degraded

Fault status page: http://status.ovh.com/?do=details&id=11304

Lost transactions: Minimal. Our critical processes were maintained.

At approximately 9:09 AM Second Life time on the 2nd of November, 2015, our hosting partners suffered a fiber cut in a tunnel a few kilometers from the datacentre. This severed all of the datacentre's routes, with the exception of one very slow backup line.

We were aware of the problem immediately, and were in touch with the datacentre within minutes. They provisioned a special route for us which we could use for absolutely essential traffic only, flowing over the one remaining backup line.

The result of this was that all of our services were severely degraded. However, we were prioritising transaction and payment traffic over the backup route, so thankfully we were still processing transactions (around 10,000 transactions were processed during the outage, with roughly a 95% success rate).
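One way to picture this kind of prioritisation is a priority queue in front of the slow link: essential traffic always leaves first, and everything else waits. The Python sketch below is an illustration only. The traffic classes and the `PriorityLink` name are hypothetical, and it is not a description of how the traffic was actually prioritised.

```python
import heapq
from itertools import count

# Hypothetical traffic classes: lower number means higher priority.
PRIORITY = {"payment": 0, "transaction": 0, "other": 1}


class PriorityLink:
    """Queue that releases essential traffic before anything else."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # tie-breaker keeps arrival order per class

    def enqueue(self, kind, payload):
        rank = PRIORITY.get(kind, 1)  # unknown kinds count as "other"
        heapq.heappush(self._heap, (rank, next(self._seq), payload))

    def dequeue(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)
```

With requests queued in the order web page, payment, image, transaction, the link would send the payment and the transaction first, then the web page and the image.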

The datacentre has four main trunks over two distinct physical paths: two heading north towards Montreal, and two heading south towards Newark. When a similar outage affected us in May, the two southern links had not yet been established (mostly due to a long, four-year bureaucratic process involved with laying cable over the international border between the US and Canada). At the time, OVH (the hosts) assured us that they would have the new link to Newark in place by September.

Unfortunately, these new redundant southern routes were not in place yet. But by pure coincidence, they were only a couple of days away from being installed. Since the new routes were so close to being finalised, they were able to rush them into service. At 2pm SL time, the uplink was established and CasperTech services were restored.

We declared "ALL CLEAR" at 5:19pm SL time, once the datacentre had confirmed that the new routes were stable.

The links which had been severed were finally spliced together and restored at 9:10pm SL time.

We now have two physically redundant routes to the datacentre, so any future fiber cut is very unlikely to cause a disruption in service.

28/05/2015

Affected: All Services

Disruption length: 1 hour 30 minutes

Service: Degraded

Fault status page: http://status.ovh.com/?do=details&id=9603

Lost transactions: Minimal. Our critical processes were maintained.

At approximately 6pm SL time, a car collided with a telegraph pole belonging to our hosting partner, which severed connectivity to CasperTech services. The pole was located between the datacentre and Montreal.

We were aware of the problem immediately, and were on the phone right away with the datacentre to discuss the problem.

While the datacentre does have several redundant pipes, they are all currently running along a single physical route. This is clearly unacceptable and we have contacted the host in order to establish what their redundancy plan is for the future.

We have been assured that this situation will be improved as early as July, and will be completely solved by September, when their southern route from Newark will be installed.

Service was restored at approximately 7:30pm SL time.